As a publisher, you need to understand what toxicity is, and what it isn’t, because the health of your community depends on it. Your users deserve a safe and civil environment where they can exchange ideas without fear of toxicity taking over.

Quality conversations are essential to a sustainable community. They lead to productive discourse that keeps users engaged and spending more time on your website.

The good news? Publishers can set their own rules for their community and use moderation technology to remove toxicity and foster quality conversations.

But toxicity isn’t always easy to spot in the wild. So, in this post, let’s define what toxicity is and take a closer look at a few different types of toxicity.

What is online toxicity?

First, let’s address what toxicity isn’t: toxicity is not the same as negativity. Arguments and disagreements happen in every community, but moderation helps keep those arguments healthy. In fact, healthy disagreement helps move the conversation forward.

Toxicity has the opposite effect. According to Perspective API, toxicity is a rude, disrespectful, or unreasonable comment that is likely to make people leave a discussion. Many forms of toxicity are obvious, including racism, hate speech, harassment, profane language, and threats. But some are less obvious:

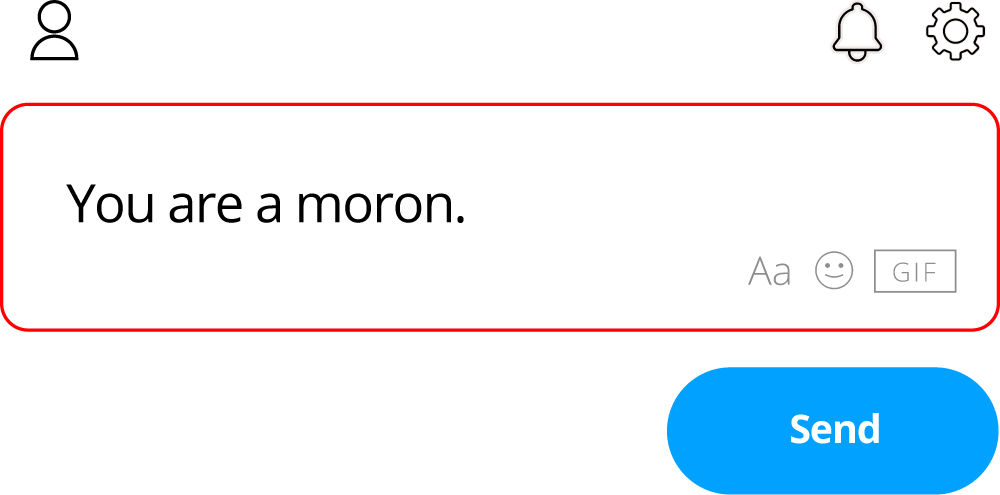

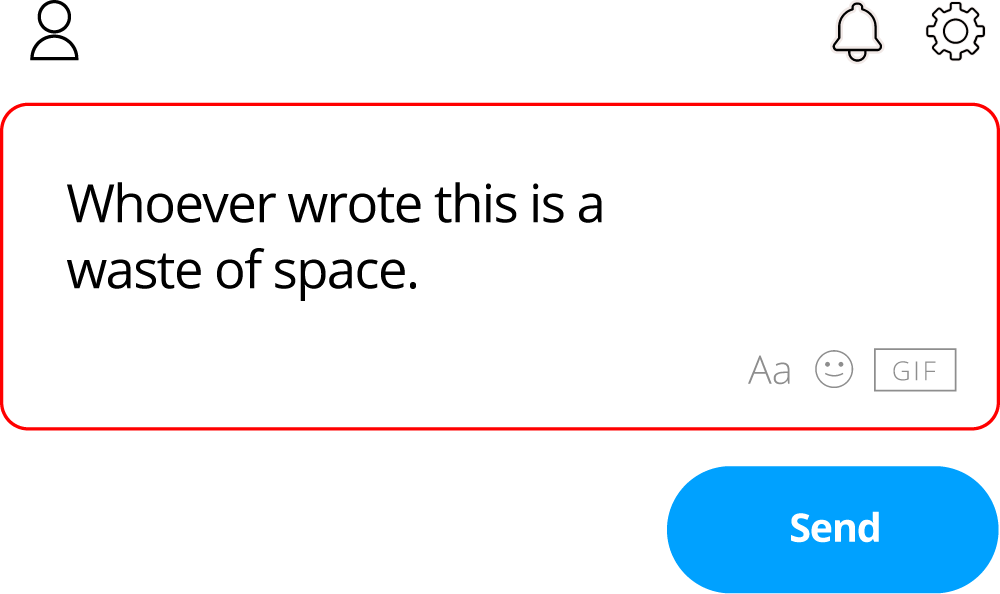

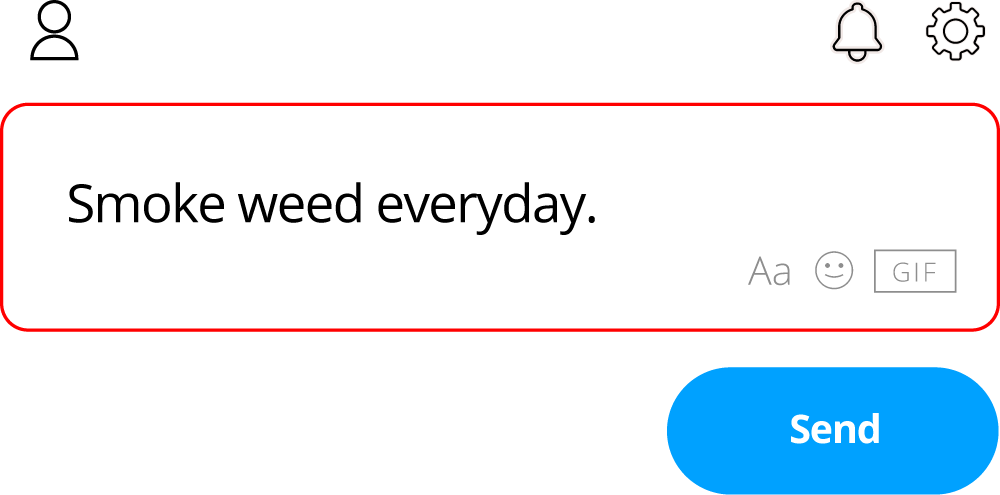

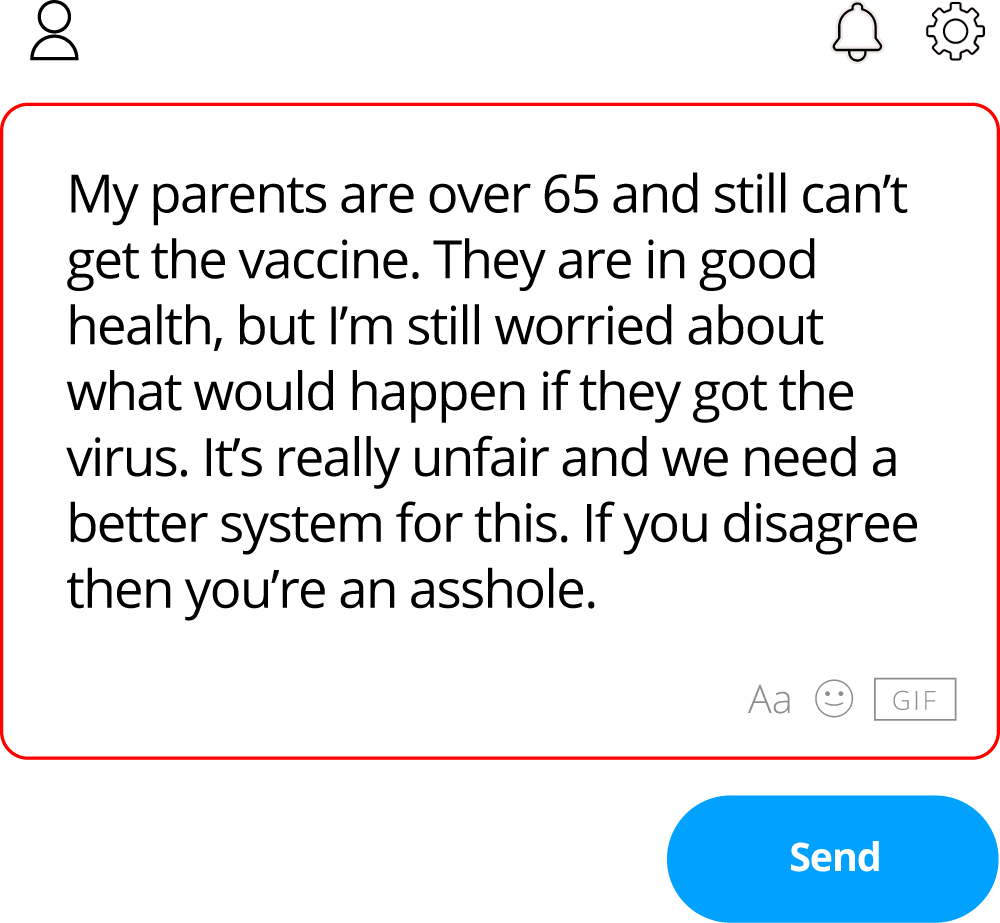

Can you guess what makes these comments toxic?

Toxicity can be a moving target: human language is nuanced and constantly evolving. That’s why AI-driven moderation that adapts to those changes, combined with human review, is an essential part of any effective multi-layered moderation process. With that in mind, let’s look at a few common types of toxic comments in a publisher’s community.

Attack on commenter: A comment doesn’t have to contain profanity to be toxic. This example is an attack on another commenter—it doesn’t help move the conversation forward.

Attack on author: Readers can leave productive feedback for journalists. What they can’t do is attack the author.

Off-topic: This comment was posted on an article about medical marijuana. Unless the commenter meant to suggest a glaucoma treatment they had personally found effective, the comment is off-topic, and off-topic comments don’t advance the conversation.

Off-topic: There’s nothing wrong with debating politics. However, context matters. If this comment were posted on an article about a vacuum cleaner, it would be off-topic.

Obscene: Even if most of a comment is perfectly reasonable, an obscene word at the end can render it toxic.

Your community, your rules: quality conversations require moderation

Publisher-hosted communities can be safe and civil environments where all voices can be heard. Ultimately, publishers decide what is and isn’t permissible in their community; the role of moderation technology is to remove toxicity and enforce the publisher’s rules. Learn more about how moderation creates quality conversations online.