Aida is OpenWeb’s LLM-based moderation technology. Aida is in open beta and all OpenWeb partners can enroll — join here.

Online conversations have been putting moderation systems across the web to the test. It’s important that people can use the web to express themselves — but we need a way to balance freedom of expression with safety online.

Meet Aida

Aida (short for Artificial Intelligence moDeration Agent) is OpenWeb's Large Language Model (LLM)-based technology, trained to keep conversations on the open internet healthy, constructive, engaging, and suitable.

Internal and partner tests have shown that Aida's moderation decisions are 95% accurate (almost double the industry benchmark of 50%), that it has outperformed manual moderation in every real-world environment tested, and that it can therefore reduce the need for manual moderation by 90%. More detail on these numbers below.

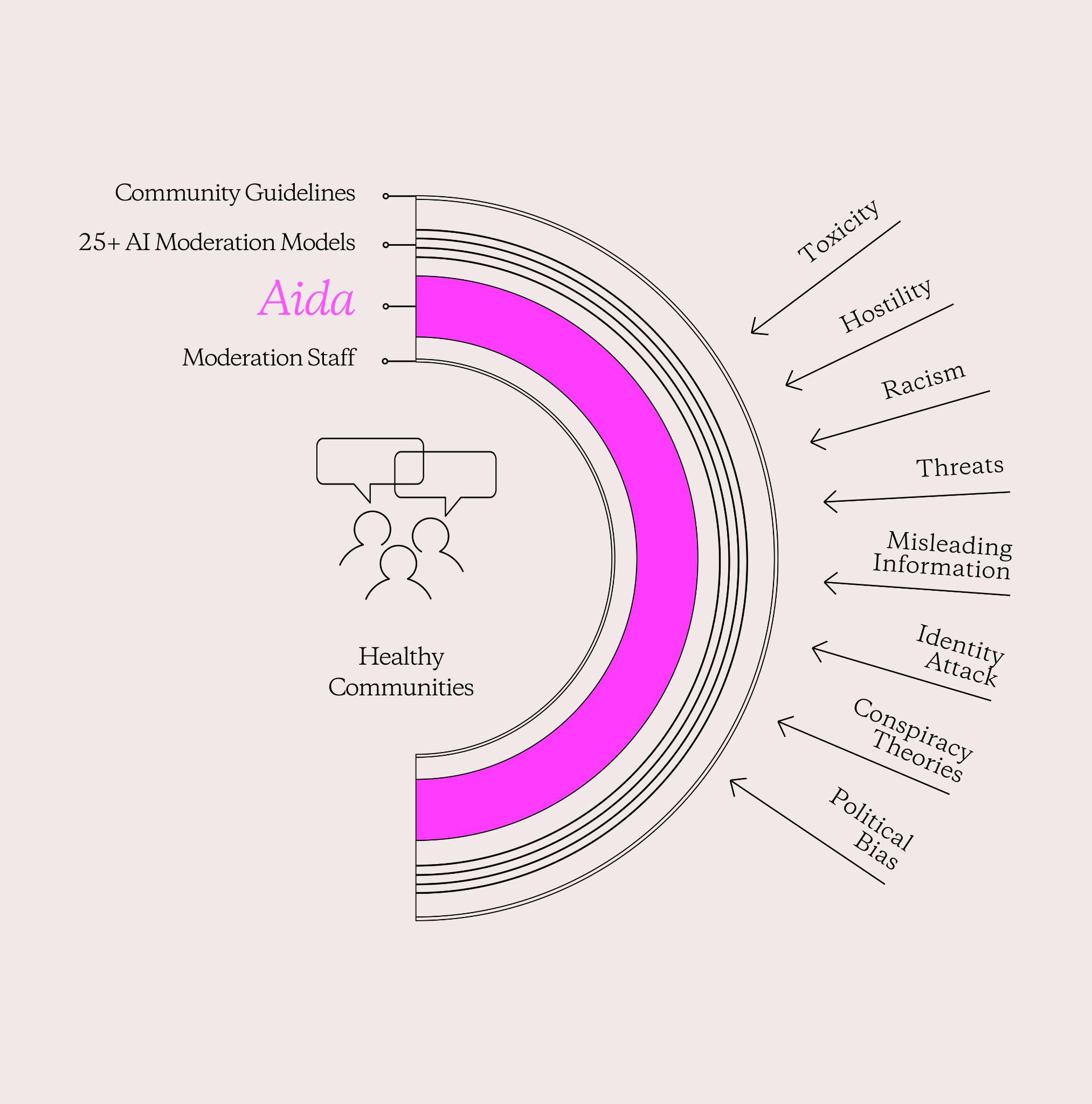

Aida brings new levels of automated precision and nuance to moderating online conversations, acting as an additional line of defense in our moderation system. When our 25+ AI models flag a comment they are unsure about, Aida steps in.

Today, we’re proud to announce that Aida is available to OpenWeb partners in an open beta. Any and all OpenWeb partners can use Aida — simply fill out this request form and we’ll be in touch.

So far, the results have been incredible. Let’s dive into it.

What is Aida, exactly?

As noted above, early tests have had incredible results. We're working on a full write-up that will dive deeper into those results (and include our methodology). This is something to be truly excited about if safety on the open internet matters to you.

But that’s not all. Aida also:

- Recognizes nuance where it matters the most: Our partner tests have shown that Aida understands nuanced issues that often elude even trained moderation staff. This includes transphobic and anti-LGBTQ+ comments, political conspiracy theories, and dog whistles that escape other models.

- Never stops learning: Aida's knowledge grows and adapts the more content and comments it reviews. This dynamic capability continuously improves how Aida evaluates comments and how accurate its moderation decisions are.

- Dramatically reduces response times: Comments in the queue are moderated at unprecedented speed.

How Aida Works

Comments that reach Aida are first flagged by OpenWeb's initial moderation layer, a set of over 25 AI/ML models spanning subjects from hostility to inappropriate emojis.

To make a moderation decision on a flagged comment, Aida combines its knowledge base with additional context: the comment's thread, the title and topic of the article, and the tone and sentiment of the content.

Of course, human moderators are still in the loop in order to continuously improve the quality of the algorithm. Aida doesn’t replace the need for moderation staff, but rather allows them to focus their efforts toward assuring the quality of moderation decisions and other community management tasks.
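To make the two-stage flow concrete, here is a minimal sketch in Python, assuming a set of first-layer classifiers that each return "flag / don't flag" and a second-stage reviewer that receives the comment together with its thread and article context. OpenWeb has not published Aida's interface, so every name here (`Comment`, `Decision`, `moderate`, `build_review_prompt`, the classifier names, and the `human_review` action) is hypothetical, the classifiers are toy keyword rules, and the reviewer is a stub standing in for an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Comment:
    text: str
    article_title: str
    article_topic: str
    thread: List[str] = field(default_factory=list)  # earlier comments in the thread


@dataclass
class Decision:
    action: str  # hypothetical labels: "approve", "reject", or "human_review"
    reason: str


# Hypothetical first-layer model: returns True when it flags a comment.
Classifier = Callable[[Comment], bool]
# Hypothetical LLM-based reviewer: takes a prompt and returns a decision.
Reviewer = Callable[[str], Decision]


def build_review_prompt(comment: Comment, flagged_by: List[str]) -> str:
    """Bundle the comment with the context the second stage looks at."""
    thread = "\n".join(f"- {c}" for c in comment.thread) or "(no earlier comments)"
    return (
        f"Article title: {comment.article_title}\n"
        f"Article topic: {comment.article_topic}\n"
        f"Thread so far:\n{thread}\n"
        f"Flagged by: {', '.join(flagged_by)}\n"
        f"Comment: {comment.text}\n"
        "Weigh the tone and sentiment of the comment in this context, "
        "then answer approve, reject, or human_review."
    )


def moderate(comment: Comment, classifiers: Dict[str, Classifier],
             review: Reviewer) -> Decision:
    # Stage 1: run every first-layer model and record which ones flag the comment.
    flagged_by = [name for name, flags in classifiers.items() if flags(comment)]
    if not flagged_by:
        return Decision("approve", "not flagged by the first layer")
    # Stage 2: only flagged comments are escalated to the LLM-based reviewer.
    return review(build_review_prompt(comment, flagged_by))


if __name__ == "__main__":
    # Toy keyword rules standing in for real ML models.
    classifiers = {
        "hostility": lambda c: "idiot" in c.text.lower(),
        "emoji": lambda c: "\U0001F595" in c.text,
    }

    def stub_review(prompt: str) -> Decision:
        # Placeholder for an LLM call; a real reviewer would parse the model's reply.
        return Decision("human_review", "stub reviewer, no model attached")

    ok = Comment("Great reporting.", "Election night", "Politics")
    rude = Comment("Only an idiot believes this.", "Election night", "Politics",
                   thread=["I disagree with the polling section."])
    print(moderate(ok, classifiers, stub_review).action)
    print(moderate(rude, classifiers, stub_review).action)
```

In this sketch the reviewer only runs on comments the first layer flags, and it can hand a decision back to human moderators (`human_review`) rather than acting on its own, mirroring the human-in-the-loop setup described above.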

Join the Open Beta

This step forward for our moderation system is a key asset that is helping our partners maintain community health and trust during major cultural moments, including divisive ones like the 2024 US Presidential election.

Aida is now in open beta and deployed with 300 partners worldwide.

If you’re interested in trying Aida out, click here.